When a GGP server starts a new game and provides the GDL to the players, it then gives a grace period, known as meta-gaming time, to those players for them to perform any pre-game analysis they might wish to do, ahead of starting the actual move clock. Typically the allotted meta-gaming time will be 3-4 times the per-move time.

During the meta-gaming time players must at least set up any machinery they will need to process the moves. Typically this at least involves building a suitable state machine, and in Sancho's case that involves building and optimizing a propnet, as described in previous posts.

However, meta-gaming time is an opportunity to perform considerably more analysis, and use it to tune the way you play the game in any number of ways. Sancho goes through a number of distinct stages during meta-gaming, which are described in what follows.

Build and optimize the propnet - this has been discussed previously

Static dependency analysis

Once the propnet has been constructed, we then analyse it to determine 'dependency distances' between propositions. We define the dependency distance between proposition A and proposition B as the number of transitions that must be passed through, traversing backward the input graph of A, before B is encountered. Obviously this can be infinite, and the analysis works (at least loosely speaking) by using dynamic programming to (in essence) prevent any graph traversal having to cross the same edge twice. For the purposes of this analysis 'legal' props are considered to be virtual inputs to their corresponding 'does' props. The result is (logically - we currently do not actually represent it this way, though we may move to do so at some point) a matrix of distances, with the proposition of the network being the row and column indexes. The entry values in this matrix will be infinity or an integer less than the network size.

These dependencies can be useful in many subsequent analyses (some of which I will talk about below), but as a simple illustration consider the game of 'Breakthrough'. The game terminates when one player gets one of their pawns to the opposite side of the board from where they started. Considering the black role, whose pawns start on rows 1 and 2 of the board, this is equivalent of getting a pawn to the 8th row. If you look at the dependency distance matrix row for dependencies feeding the 'goal black 100' proposition (i.e. - a black win) what you will see is that the 8 base propositions corresponding to a black pawn on row 8 have a distance of 0, those on row 7 a distance of 1, and so on. Similarly moves of the form 'move x 7 y 8' will be at distance 1, 'move x 6 y 7' at distance 2, and so on. This leads to a natural candidate closeness-to-goal heuristic (which can be defined on states or moves), which is currently an active area of ongoing experimentation.

Factorization

Some games factorize into 2 or more independent sub-games (or in some cases semi-independent, which is a case I'll return to shortly). A canonical example is 'connect4 simultaneous', which is just 2 games of connect4 where each role plays alternately on the two boards (so in any given turn each role plays on one board and the boards alternate). Without factorization this has an approximate branching factor of 16 (neglecting full columns), so to explore the tree to depth N fully would require roughly 16^N states to be searched. However, if you actually treat it as 2 games, and search the two game trees independently you need only search 2*(8^N) nodes to completely search to depth N.

The ratio between these two is: 2^(N-1), so that's an exponential reduction of search effort in depth searched!

We can use the dependency distances calculated during dependency analysis to try to find factors in games. Basically this involves looking at the propositions which disjunctive factors of the goal and terminal propositions are dependent on. Taking the connect4 simultaneous example, the win goals (lines of 4 on either board) will boil down to an OR (disjunctive factor) of conditions which are each dependent directly (distance 0) on 4 base propositions (each instance of a line of four). In turn those base propositions turn out to be dependent (in this game) on the base propositions for the same column but with a lower row number (at greater distance), and to have no dependency at all on other columns. You then construct equivalence sets of propositions, such that any two propositions that are dependent on any other proposition which feed into the same disjunctive factor (OR) in the goal (or terminal) calculation then they belong to the same equivalence set.

With some fiddling for annoyingly written GDL (take a look at the way the terminal condition for C4 simultaneous is written and you'll probably see what I mean), and for 'control propositions' which are independent of moves, you wind up with either all propositions being in the same set (game is not factorizable), or with multiple sets, which are the factors. In C4 simultaneous you wind up with all the base props and moves for one board in one set, and those for the other in another.

At this point there are still two different cases to consider, which highlight the difference between 'truly factorizable' games, like connect4 simultaneous', and semi-factorizable games, of which 'Breakthrough w/walls' is the only example on Tiltyard that I am aware of. The difference is that in the pseudo-factorizable games you have more than one choice of move on a single turn in multiple factors. This means that if you search the factors independently you have an issue with deciding how to use the result to choose an overall move for the full game. This can be resolved by inserting pseudo-noop moves into the factors and weighing the relative score of the best move in one factor against the cost of having to play the pseudo-noop in the other(s).

Sancho plays the following games in a successfully factorized manner (at least these are the ones I am aware of and have specifically tested):

- Connect 4 simultaneous

- Dual Connect 4 (Stanford repository)

- Joint Connect 4 (Stanford repository)

- Chinook

- Breakthrough w/walls

- Dual Hamilton (Stanford repository)

- Joint Button and Lights (Stanford repository)

Note - the 'multiple xxx' games on the Stanford repository are not really factorized in a meaningful sense. It's more that they have a lot of ignorable junk in their generated base propositions and moves, which would pertain to factors if it weren't for the fact that you can eliminate them easily by simpler techniques.

Generation of candidate heuristics

After the factorization analysis is complete, we then generate candidate heuristics. I'm not going to go into detail about this in this post (there will be posts on heuristics later), but the key points are:

- Static analysis is used to produce possible heuristics for the game

- No attempt is made to determine (at this point) if they are likely to be any real use! For example, one might throw in focus and mobility heuristics here, since they are easily derivable form the GDL and/or propnet

- Crucially (for some games) one such heuristic is a measure that looks like it might reflect piece count in the game (and I stress 'might', we do no clever analysis to verify that it does, just a basic arity and set-size test that test that base propositions could be piece locations on a board of at least 2 dimensions)

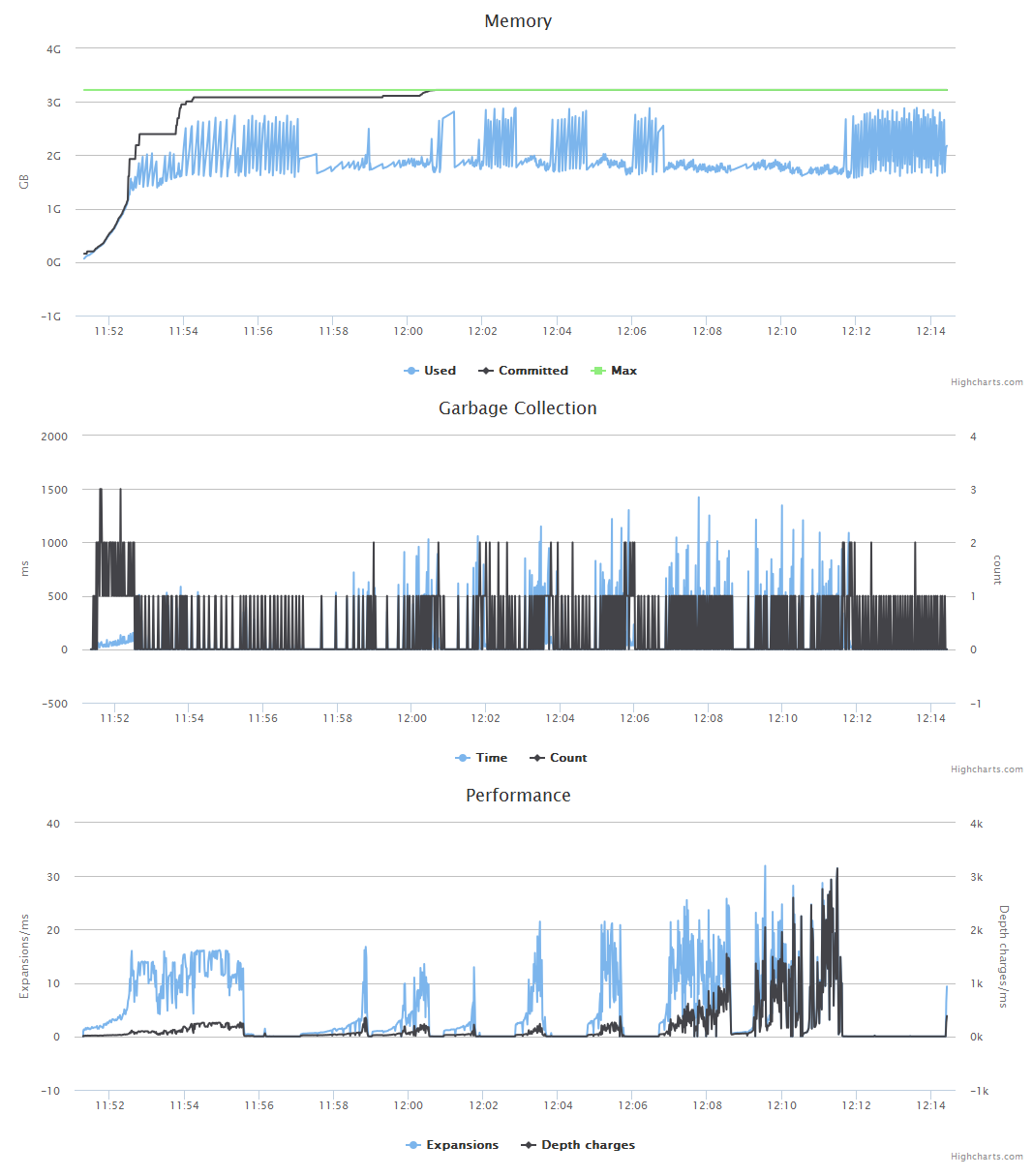

Game simulation to gather statistics

We then spend half the remaining meta-gaming time playing random games (playouts from the initial state), and gathering statistics, such as:

- Distribution of game length

- Average branching factor

- Presence of simultaneous moves (easily determined statically, but for historical reasons we do it dynamically)

- Effectiveness of 'greedy' playout processing wherein the playouts are not totally random, but actively perform a 1-level search at each step to find/avoid forced/unforced win/losses (this makes playouts much more expensive, and so we get fewer, but in some games it is highly effective at improving the convergence rate of MCTS)

- Correlation of heuristic signals from each of the candidate heuristics with actual outcomes

Tuning of search parameters

Based on the results of simulation we then tune a number of search parameters, which we will use during MCTS expansion as we play the game. Examples of things tuned are:

- Enablement of greedy playouts (typically this costs up to an order of magnitude to the simulation rate, so we only enable it where it was found to be very effective relative to its cost, which is itself largely dependent ion the game's branching factor)

- Exploration bias - i.e. - how much weight to give the UCB exploration term in relation to its exploitation term. Note that this can have a very significant impact indeed on play quality, and if you seek to tune anything (even to find a good fixed average for use in all games) this is the one to start with.

- How to perform node expansion - specifically a large cost in MCTS node expansion is evaluating the child states for terminality. It is possible to structure things to defer this until the child in question is itself expanded, but this has a cost in convergence rate (if you propagate the consequences of terminality upwards assuming rational play), and is usually a bad idea. However, if you know the game is unlikely to terminate at a certain node, based on a simplistic analysis of things like the game length distribution, you can decide whether or not to perform this check dynamically, depending on the node depth in the game tree.

- How to handle 'complete' nodes (that is to say, those whose value is known with certainty, of which terminal nodes are the canonical example). In particular these need to be handled differently (at least if your tree edges represent individual role decisions as ours do, rather than joint moves) in simultaneous move games.

Crystallization of heuristics

Based on the correlation of each candidate heuristic with the simulated outcomes sampled above, we then enable those heuristics which appear to work in the game in question. That set will then be used during move search. In particular we are able to identify piece capture in games like checkers and breakthrough in this way, and up-weight their selection during MCTS expansion. If you've ever watched a pure MCTS player play Breakthrough and wondered why it quite frequently seems to just suicide its pieces when they are attacked, you'll appreciate why this is effective.

Make an early start on first turn search

If we have any meta-gaming remaining after all of the above, we start the game search thread so it can be getting on with considering the first move. While it is doing that we yield on the meta-game request thread until a couple of seconds before the timeout, which ensures that the server does not curtail meta-gaming early (after all, with most opponents we are productively searching at this point, and they are twiddling their thumbs, so the longer we can prolong this the better!)

Summary

Your meta-gaming time is a valuable resource. Use it!